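Deep learning networks have gained immense popularity in the past few years. The "attention mechanism" is integrated into deep learning networks to improve their performance. Adding an attention component to a network has shown significant improvement in tasks such as machine translation, image recognition, and text summarization.

This tutorial shows how to add a custom attention layer to a network built using a recurrent neural network. We'll illustrate an end-to-end application of time series forecasting using a very simple dataset. The tutorial is designed for anyone looking for a basic understanding of how to add user-defined layers to a deep learning network and how to use this simple example to build more complex applications.

After completing this tutorial, you will know:

- Which methods are required to create a custom attention layer in Keras

- How to incorporate the new layer in a network built using SimpleRNN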


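Let's get started.

Tutorial Overview

This tutorial is divided into three parts; they are:

- Preparing a simple dataset for time series forecasting

- How to use a network built via SimpleRNN for time series forecasting

- Adding a custom attention layer to the SimpleRNN network

Prerequisites

It is assumed that you are familiar with the following topics. You can click the links below for an overview.

The Dataset

The focus of this article is to gain a basic understanding of how to build a custom attention layer for a deep learning network. For this purpose, let's use a very simple example: the Fibonacci sequence, where each number is the sum of the previous two. The first 10 numbers of the sequence are shown below:

0, 1, 1, 2, 3, 5, 8, 13, 21, 34, …

When given the previous "t" numbers, can you get a machine to accurately reconstruct the next number? This would mean discarding all the previous inputs except the last two and performing the correct operation on the last two numbers.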

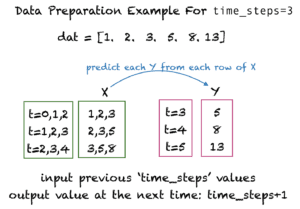

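For this tutorial, you'll construct the training examples from t time steps and use the value at t+1 as the target. For example, with t=3, each training example holds three consecutive values of the sequence, and its target is the value that follows them.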
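The windowing for t=3 can be sketched with NumPy as follows. This is only an illustration on the ten numbers listed above; the variable names `seq`, `X`, and `y` are assumptions made for this sketch and are not the tutorial's own helper functions.

```python
import numpy as np

# First ten Fibonacci numbers, as listed above
seq = np.array([0, 1, 1, 2, 3, 5, 8, 13, 21, 34])
t = 3  # number of time steps per training example

# Each row of X holds t consecutive values; y holds the value that follows
X = np.array([seq[i:i + t] for i in range(len(seq) - t)])
y = seq[t:]

for window, target in zip(X, y):
    print(window, "->", target)
```

Each printed row pairs one window of three values with its target, for example the window 0, 1, 1 with the target 2.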


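The SimpleRNN Network

In this section, you'll write the basic code to generate the dataset and use a SimpleRNN network to predict the next number of the Fibonacci sequence.

The Import Section

Let's first write the import section: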

```python
from pandas import read_csv
import numpy as np
from keras import Model
from keras.layers import Layer
import keras.backend as K
from keras.layers import Input, Dense, SimpleRNN
from sklearn.preprocessing import MinMaxScaler
from keras.models import Sequential
from keras.metrics import mean_squared_error
```

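Preparing the Dataset

The following function generates a sequence of n Fibonacci numbers (not counting the starting two values). If scale_data is set to True, it also uses the MinMaxScaler from scikit-learn to scale the values between 0 and 1. Let's see its output for n=10.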

```python
def get_fib_seq(n, scale_data=True):
    # Get the Fibonacci sequence
    seq = np.zeros(n)
    fib_n1 = 0.0
    fib_n = 1.0
    for i in range(n):
        seq[i] = fib_n1 + fib_n
        fib_n1 = fib_n
        fib_n = seq[i]
    scaler = []
    if scale_data:
        scaler = MinMaxScaler(feature_range=(0, 1))
        seq = np.reshape(seq, (n, 1))
        seq = scaler.fit_transform(seq).flatten()
    return seq, scaler

fib_seq = get_fib_seq(10, False)[0]
print(fib_seq)
```

```
[ 1.  2.  3.  5.  8. 13. 21. 34. 55. 89.]
```

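Next, we need a function get_fib_XY() that reformats the sequence into training examples and target values to be used by the Keras input layer. When given time_steps as a parameter, get_fib_XY() constructs each row of the dataset with time_steps columns. This function not only constructs the training set and test set from the Fibonacci sequence but also shuffles the training examples and reshapes them to the format required by TensorFlow, i.e., total_samples x time_steps x features. The function also returns the scaler object that scales the values if scale_data is set to True.

Let's generate a small training set to see what it looks like. We set time_steps=3 and total_fib_numbers=12, with about 70% of the examples used for training and the rest for testing. Note that the training and test examples have been shuffled by the permutation() function.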

```python
def get_fib_XY(total_fib_numbers, time_steps, train_percent, scale_data=True):
    dat, scaler = get_fib_seq(total_fib_numbers, scale_data)
    Y_ind = np.arange(time_steps, len(dat), 1)
    Y = dat[Y_ind]
    rows_x = len(Y)
    X = dat[0:rows_x]
    for i in range(time_steps-1):
        temp = dat[i+1:rows_x+i+1]
        X = np.column_stack((X, temp))
    # Random permutation with a fixed seed
    rand = np.random.RandomState(seed=13)
    idx = rand.permutation(rows_x)
    split = int(train_percent*rows_x)
    train_ind = idx[0:split]
    test_ind = idx[split:]
    trainX = X[train_ind]
    trainY = Y[train_ind]
    testX = X[test_ind]
    testY = Y[test_ind]
    trainX = np.reshape(trainX, (len(trainX), time_steps, 1))
    testX = np.reshape(testX, (len(testX), time_steps, 1))
    return trainX, trainY, testX, testY, scaler

trainX, trainY, testX, testY, scaler = get_fib_XY(12, 3, 0.7, False)
print('trainX = ', trainX)
print('trainY = ', trainY)
```

```
trainX =  [[[ 8.]
  [13.]
  [21.]]

 [[ 5.]
  [ 8.]
  [13.]]

 [[ 2.]
  [ 3.]
  [ 5.]]

 [[13.]
  [21.]
  [34.]]

 [[21.]
  [34.]
  [55.]]

 [[34.]
  [55.]
  [89.]]]
trainY =  [ 34.  21.   8.  55.  89. 144.]
```

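Setting Up the Network

Now let's set up a small network with two layers. The first one is the SimpleRNN layer, and the second one is the Dense layer. Below is a summary of the model.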

```python
# Set up parameters
time_steps = 20
hidden_units = 2
epochs = 30

# Create a traditional RNN network
def create_RNN(hidden_units, dense_units, input_shape, activation):
    model = Sequential()
    model.add(SimpleRNN(hidden_units, input_shape=input_shape, activation=activation[0]))
    model.add(Dense(units=dense_units, activation=activation[1]))
    model.compile(loss='mse', optimizer='adam')
    return model

model_RNN = create_RNN(hidden_units=hidden_units, dense_units=1, input_shape=(time_steps,1),
                       activation=['tanh', 'tanh'])
model_RNN.summary()
```

```
Model: "sequential_1"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
simple_rnn_3 (SimpleRNN)     (None, 2)                 8
_________________________________________________________________
dense_3 (Dense)              (None, 1)                 3
=================================================================
Total params: 11
Trainable params: 11
Non-trainable params: 0
```
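The parameter counts in this summary can be checked by hand. The sketch below assumes the usual SimpleRNN parameterization (an input kernel, a recurrent kernel, and a bias vector) and a Dense layer with a bias; the helper names `simple_rnn_params` and `dense_params` are hypothetical and exist only for this illustration.

```python
def simple_rnn_params(hidden_units, input_features):
    # input-to-hidden weights + hidden-to-hidden weights + one bias per unit
    return hidden_units * input_features + hidden_units * hidden_units + hidden_units

def dense_params(inputs, units):
    # one weight per (input, unit) pair + one bias per unit
    return inputs * units + units

rnn_count = simple_rnn_params(hidden_units=2, input_features=1)
dense_count = dense_params(inputs=2, units=1)
print(rnn_count, dense_count, rnn_count + dense_count)
```

With two hidden units and one input feature, this gives 8 parameters for the recurrent layer and 3 for the dense layer, matching the 11 total parameters reported above.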

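Train the Network and Evaluate

The next step is to add code that generates a dataset, trains the network, and evaluates it. This time around, we'll scale the data between 0 and 1. We don't need to pass the scale_data parameter as its default value is True.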

```python
# Generate the dataset
trainX, trainY, testX, testY, scaler = get_fib_XY(1200, time_steps, 0.7)

model_RNN.fit(trainX, trainY, epochs=epochs, batch_size=1, verbose=2)

# Evaluate the model
train_mse = model_RNN.evaluate(trainX, trainY)
test_mse = model_RNN.evaluate(testX, testY)

# Print the errors
print("Train set MSE = ", train_mse)
print("Test set MSE = ", test_mse)
```

As output, you'll see the progress of the training and the following values for the mean squared error:

```
Train set MSE =  5.631405292660929e-05
Test set MSE =  2.623497312015388e-05
```

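Adding a Custom Attention Layer to the Network

In Keras, it is easy to create a custom layer that implements attention by subclassing the Layer class. The Keras guide lists clear steps for creating a new layer via subclassing. You'll use those guidelines here. All the weights and biases corresponding to a single layer are encapsulated by this class. You need to write the __init__ method as well as override the following methods:

- build(): The Keras guide recommends adding weights in this method once the size of the inputs is known. This method "lazily" creates weights. The built-in function add_weight() can be used to add the weights and biases of the attention layer.

- call(): The call() method implements the mapping of inputs to outputs. It should implement the forward pass during training.

The Call Method for the Attention Layer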

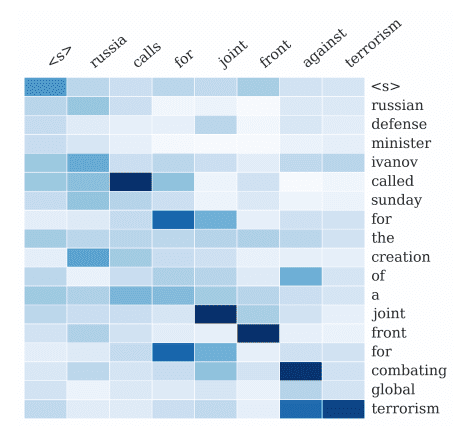

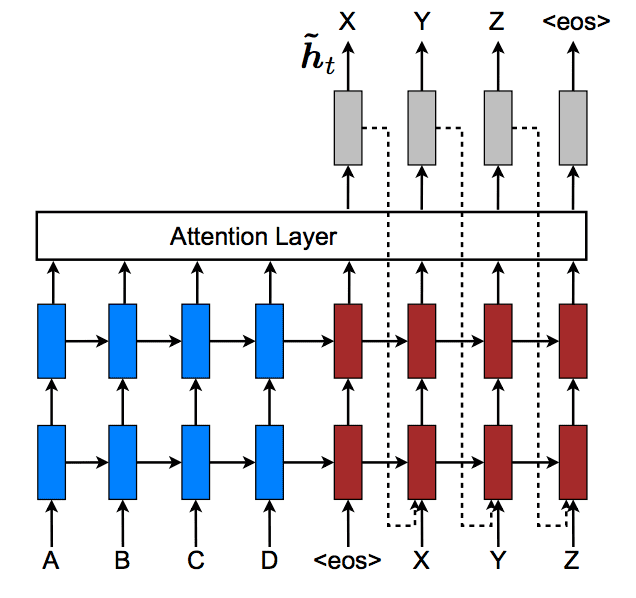

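The call method of the attention layer has to compute the alignment scores, weights, and context. You can go through the details of these parameters in Stefania's excellent article on The Attention Mechanism from Scratch. You'll implement Bahdanau attention in your call() method.

The good thing about inheriting a layer from the Keras Layer class and adding the weights via the add_weight() method is that the weights are tuned automatically. Keras tracks the operations performed in the call() method and computes their gradients during training through automatic differentiation. It is important to specify trainable=True when adding the weights. If you need your own weight training procedure, you can override train_step() on a keras.Model subclass.

The code below implements the custom attention layer.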

```python
# Add attention layer to the deep learning network
class attention(Layer):
    def __init__(self,**kwargs):
        super(attention,self).__init__(**kwargs)

    def build(self,input_shape):
        self.W=self.add_weight(name='attention_weight', shape=(input_shape[-1],1),
                               initializer='random_normal', trainable=True)
        self.b=self.add_weight(name='attention_bias', shape=(input_shape[1],1),
                               initializer='zeros', trainable=True)
        super(attention, self).build(input_shape)

    def call(self,x):
        # Alignment scores. Pass them through the tanh function
        e = K.tanh(K.dot(x,self.W)+self.b)
        # Remove the dimension of size one
        e = K.squeeze(e, axis=-1)
        # Compute the weights
        alpha = K.softmax(e)
        # Reshape to TensorFlow format
        alpha = K.expand_dims(alpha, axis=-1)
        # Compute the context vector
        context = x * alpha
        context = K.sum(context, axis=1)
        return context
```
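To make the tensor shapes in call() easier to follow, here is a NumPy-only sketch of the same arithmetic. It is not the Keras layer itself: the input `x` is random data standing in for the SimpleRNN output, the batch size of 4 is arbitrary, and `W` and `b` are untrained arrays with the same shapes that build() creates for 20 time steps and 2 hidden units.

```python
import numpy as np

rng = np.random.default_rng(0)
batch, time_steps, hidden_units = 4, 20, 2

# Stand-in for the SimpleRNN output when every time step is returned
x = rng.normal(size=(batch, time_steps, hidden_units))

# Same shapes as the weights created in build(): (features, 1) and (time_steps, 1)
W = rng.normal(scale=0.05, size=(hidden_units, 1))
b = np.zeros((time_steps, 1))

# Alignment scores, one per time step
e = np.tanh(x @ W + b)               # (batch, time_steps, 1)
e = np.squeeze(e, axis=-1)           # (batch, time_steps)

# Softmax over the time axis turns the scores into attention weights
exp_e = np.exp(e - e.max(axis=-1, keepdims=True))
alpha = exp_e / exp_e.sum(axis=-1, keepdims=True)

# Weighted sum of the hidden states over time gives the context vector
context = (x * alpha[..., np.newaxis]).sum(axis=1)   # (batch, hidden_units)

print(context.shape)
print(W.size + b.size)
```

The time dimension is summed out, so each sample is reduced to a single vector of size hidden_units. The layer holds 2 weights plus 20 biases, i.e., 22 trainable parameters for this configuration.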

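RNN Network with Attention Layer

Let's now add an attention layer to the RNN network you created earlier. The function create_RNN_with_attention() now specifies an RNN layer, an attention layer, and a Dense layer in the network. Make sure to set return_sequences=True when specifying the SimpleRNN. This returns the output of the hidden units for every time step rather than only the last one.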
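The effect of return_sequences=True can be illustrated with a small NumPy recurrence. This is a sketch of a plain tanh RNN under assumed random weights, not the Keras implementation; the function `simple_rnn_forward` and the weight names `Wx`, `Wh`, and `b` are hypothetical.

```python
import numpy as np

def simple_rnn_forward(x, Wx, Wh, b):
    """Run a tanh RNN over x of shape (time_steps, features).

    Returns the hidden state at every time step, shape (time_steps, hidden_units).
    """
    h = np.zeros(Wh.shape[0])
    states = []
    for x_t in x:
        # New hidden state from the current input and the previous hidden state
        h = np.tanh(x_t @ Wx + h @ Wh + b)
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(1)
time_steps, features, hidden_units = 20, 1, 2
x = rng.normal(size=(time_steps, features))
Wx = rng.normal(size=(features, hidden_units))
Wh = rng.normal(size=(hidden_units, hidden_units))
b = np.zeros(hidden_units)

all_states = simple_rnn_forward(x, Wx, Wh, b)   # what return_sequences=True exposes
last_state = all_states[-1]                     # what the default setting exposes
print(all_states.shape, last_state.shape)
```

The attention layer needs the full (time_steps, hidden_units) array, because it assigns one weight to each time step before summing over them.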

Let's look at the summary of the model with attention.

```python
def create_RNN_with_attention(hidden_units, dense_units, input_shape, activation):
    x=Input(shape=input_shape)
    RNN_layer = SimpleRNN(hidden_units, return_sequences=True, activation=activation)(x)
    attention_layer = attention()(RNN_layer)
    outputs=Dense(dense_units, trainable=True, activation=activation)(attention_layer)
    model=Model(x,outputs)
    model.compile(loss='mse', optimizer='adam')
    return model

model_attention = create_RNN_with_attention(hidden_units=hidden_units, dense_units=1,
                                  input_shape=(time_steps,1), activation='tanh')
model_attention.summary()
```

```
Model: "model_1"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
input_2 (InputLayer)         [(None, 20, 1)]           0
_________________________________________________________________
simple_rnn_2 (SimpleRNN)     (None, 20, 2)             8
_________________________________________________________________
attention_1 (attention)      (None, 2)                 22
_________________________________________________________________
dense_2 (Dense)              (None, 1)                 3
=================================================================
Total params: 33
Trainable params: 33
Non-trainable params: 0
_________________________________________________________________
```

Train and Evaluate the Deep Learning Network with Attention

It’s time to train and test your model and see how it performs in predicting the next Fibonacci number of a sequence.

```python
model_attention.fit(trainX, trainY, epochs=epochs, batch_size=1, verbose=2)

# Evaluate the model
train_mse_attn = model_attention.evaluate(trainX, trainY)
test_mse_attn = model_attention.evaluate(testX, testY)

# Print the errors
print("Train set MSE with attention = ", train_mse_attn)
print("Test set MSE with attention = ", test_mse_attn)
```

You'll see the training progress as output, followed by these mean squared error values:

```
Train set MSE with attention =  5.3511179430643097e-05
Test set MSE with attention =  9.053358553501312e-06
```

You can see that even for this simple example, the mean square error on the test set is lower with the attention layer. You can achieve better results with hyper-parameter tuning and model selection. Try this out on more complex problems and by adding more layers to the network. You can also use the scaler object to scale the numbers back to their original values.
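Scaling values back with the scaler object can be sketched as follows. This example assumes scikit-learn's MinMaxScaler, as used in get_fib_seq(), and works on a short 10-number sequence; `scaled_predictions` is a hypothetical stand-in for model outputs, not real predictions from the networks above.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# A short Fibonacci sequence, scaled to [0, 1] the same way get_fib_seq() does
seq = np.array([1., 2., 3., 5., 8., 13., 21., 34., 55., 89.])
scaler = MinMaxScaler(feature_range=(0, 1))
scaled = scaler.fit_transform(seq.reshape(-1, 1)).flatten()

# Hypothetical model outputs in scaled units (here simply the last three scaled values)
scaled_predictions = scaled[-3:]

# inverse_transform expects a 2D array of shape (n_samples, n_features)
restored = scaler.inverse_transform(scaled_predictions.reshape(-1, 1)).flatten()
print(restored)
```

The same call applies to the output of model.predict(), which already has a shape of (n_samples, 1).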

You can take this example one step further by using LSTM instead of SimpleRNN, or you can build a network via convolution and pooling layers. You can also change this to an encoder-decoder network if you like.

Consolidated Code

The entire code for this tutorial is pasted below if you would like to try it. Note that your outputs would be different from the ones given in this tutorial because of the stochastic nature of this algorithm.

```python
from pandas import read_csv
import numpy as np
from keras import Model
from keras.layers import Layer
import keras.backend as K
from keras.layers import Input, Dense, SimpleRNN
from sklearn.preprocessing import MinMaxScaler
from keras.models import Sequential
from keras.metrics import mean_squared_error

# Prepare data
def get_fib_seq(n, scale_data=True):
    # Get the Fibonacci sequence
    seq = np.zeros(n)
    fib_n1 = 0.0
    fib_n = 1.0
    for i in range(n):
        seq[i] = fib_n1 + fib_n
        fib_n1 = fib_n
        fib_n = seq[i]
    scaler = []
    if scale_data:
        scaler = MinMaxScaler(feature_range=(0, 1))
        seq = np.reshape(seq, (n, 1))
        seq = scaler.fit_transform(seq).flatten()
    return seq, scaler

def get_fib_XY(total_fib_numbers, time_steps, train_percent, scale_data=True):
    dat, scaler = get_fib_seq(total_fib_numbers, scale_data)
    Y_ind = np.arange(time_steps, len(dat), 1)
    Y = dat[Y_ind]
    rows_x = len(Y)
    X = dat[0:rows_x]
    for i in range(time_steps-1):
        temp = dat[i+1:rows_x+i+1]
        X = np.column_stack((X, temp))
    # Random permutation with a fixed seed
    rand = np.random.RandomState(seed=13)
    idx = rand.permutation(rows_x)
    split = int(train_percent*rows_x)
    train_ind = idx[0:split]
    test_ind = idx[split:]
    trainX = X[train_ind]
    trainY = Y[train_ind]
    testX = X[test_ind]
    testY = Y[test_ind]
    trainX = np.reshape(trainX, (len(trainX), time_steps, 1))
    testX = np.reshape(testX, (len(testX), time_steps, 1))
    return trainX, trainY, testX, testY, scaler

# Set up parameters
time_steps = 20
hidden_units = 2
epochs = 30

# Create a traditional RNN network
def create_RNN(hidden_units, dense_units, input_shape, activation):
    model = Sequential()
    model.add(SimpleRNN(hidden_units, input_shape=input_shape, activation=activation[0]))
    model.add(Dense(units=dense_units, activation=activation[1]))
    model.compile(loss='mse', optimizer='adam')
    return model

model_RNN = create_RNN(hidden_units=hidden_units, dense_units=1, input_shape=(time_steps,1),
                       activation=['tanh', 'tanh'])

# Generate the dataset for the network
trainX, trainY, testX, testY, scaler = get_fib_XY(1200, time_steps, 0.7)
# Train the network
model_RNN.fit(trainX, trainY, epochs=epochs, batch_size=1, verbose=2)

# Evaluate the model
train_mse = model_RNN.evaluate(trainX, trainY)
test_mse = model_RNN.evaluate(testX, testY)

# Print the errors
print("Train set MSE = ", train_mse)
print("Test set MSE = ", test_mse)

# Add attention layer to the deep learning network
class attention(Layer):
    def __init__(self,**kwargs):
        super(attention,self).__init__(**kwargs)

    def build(self,input_shape):
        self.W=self.add_weight(name='attention_weight', shape=(input_shape[-1],1),
                               initializer='random_normal', trainable=True)
        self.b=self.add_weight(name='attention_bias', shape=(input_shape[1],1),
                               initializer='zeros', trainable=True)
        super(attention, self).build(input_shape)

    def call(self,x):
        # Alignment scores. Pass them through the tanh function
        e = K.tanh(K.dot(x,self.W)+self.b)
        # Remove the dimension of size one
        e = K.squeeze(e, axis=-1)
        # Compute the weights
        alpha = K.softmax(e)
        # Reshape to TensorFlow format
        alpha = K.expand_dims(alpha, axis=-1)
        # Compute the context vector
        context = x * alpha
        context = K.sum(context, axis=1)
        return context

def create_RNN_with_attention(hidden_units, dense_units, input_shape, activation):
    x=Input(shape=input_shape)
    RNN_layer = SimpleRNN(hidden_units, return_sequences=True, activation=activation)(x)
    attention_layer = attention()(RNN_layer)
    outputs=Dense(dense_units, trainable=True, activation=activation)(attention_layer)
    model=Model(x,outputs)
    model.compile(loss='mse', optimizer='adam')
    return model

# Create the model with attention, train and evaluate
model_attention = create_RNN_with_attention(hidden_units=hidden_units, dense_units=1,
                                  input_shape=(time_steps,1), activation='tanh')
model_attention.summary()

model_attention.fit(trainX, trainY, epochs=epochs, batch_size=1, verbose=2)

# Evaluate the model
train_mse_attn = model_attention.evaluate(trainX, trainY)
test_mse_attn = model_attention.evaluate(testX, testY)

# Print the errors
print("Train set MSE with attention = ", train_mse_attn)
print("Test set MSE with attention = ", test_mse_attn)
```

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Books

- Deep Learning Essentials by Wei Di, Anurag Bhardwaj, and Jianing Wei.

- Deep Learning by Ian Goodfellow, Yoshua Bengio, and Aaron Courville.

Papers

- Neural Machine Translation by Jointly Learning to Align and Translate, 2014.

Articles

- A Tour of Recurrent Neural Network Algorithms for Deep Learning.

- What is attention?

- The attention mechanism from scratch.

- An introduction to RNN and the math that powers them.

- Understanding simple recurrent neural networks in Keras.

- How to develop an encoder-decoder model with attention in Keras.

Summary

In this tutorial, you discovered how to add a custom attention layer to a deep learning network using Keras.

Specifically, you learned:

- How to override the Keras Layer class.

- The method build() is required to add weights to the attention layer.

- The call() method is required for specifying the mapping of inputs to outputs of the attention layer.

- How to add a custom attention layer to the deep learning network built using SimpleRNN.

Do you have any questions about RNNs discussed in this post? Ask your questions in the comments below, and I will do my best to answer.

Hi,I have a question

I have tried to use LSTM instead of simple_RNN with your help.

then I found it only has train loss, I can not find the val_loss.

So how can I monitor the overfitting problem?

I would like to ask you for help.

Thanks a lot!

Likely you didn’t provide validation data when you called fit(), hence no validation has been performed. See this code snippet

history = model.fit(X_train, y_train, epochs=200, batch_size=16, validation_data=(X_test,y_test))

Could you tell me how to monitor the overfitting problem with your code?

Or is it that RNN models with attention mechanism do not need to consider this overfitting problem?

I don’t see any tuning hyperparameters involved in your example.

I have fixed the problem

Thanks!

I am getting the following error : “NameError: name ‘Layer’ is not defined”

Do you have “from keras.layers import Layer”?

Well structured and well described with clarity.

Thanks for the clarity explanation and example.

I have some different result when executing the code above.

The attention layer’s output should be (None, 2) above.

However, I get (None, 20, 2) and cause dimensions doesn’t match error.

The attention layer does output the (None, 2)

But when it was concatenated to model it becomes (None, 20, 2)

Could you please tell me what’s the problem?

Thank you.

It is hard to tell what’s wrong. Can you try to copy over the example code at the end of this post and compare with your version?

Thanks for your reply.

I just copy the code at the end and execute it on my PC.

The error is below

ValueError: Error when checking target: expected dense_2 to have 3 dimensions, but got array with shape (826, 1)

It seems that the attention layer return the sequence?

I just verified and don’t see the error. Did you see which line is triggering that?

Getting same error. I don’t know but the Dense layer is expecting 3 values, but it is getting 2. I try to use Flatten before Dense, i think it is also not working.

Below is the line of code.

ValueError: Error when checking target: expected dense_2 to have 3 dimensions, but got array with shape (826, 1)

–> 123 model_attention.fit(trainX, trainY, epochs=epochs, batch_size=1, verbose=2)

My python version is 3.7.3

Keras 2.3.1

tensorflow 2.2.0

Thanks for your reply.

I just used Colab to run the code and got the right result.

It may be caused by the environment.

But I’m not sure which part is wrong.

Anyway, thank you for the very useful guide on attention layer.

It’s really helpful.

In a seq2seq model trained for time series forecasting and having a 3-stack LSTM encoder plus a similar decoder, would the following approach be reasonable?

1) Calculate the attention scores after the last encoder LSTM.

2) Condition the first decoder LSTM with attention outputs (initialize LSTM states from context vector).

I have the same question…

I also meet the error “ValueError: Error when checking target: expected dense_9 to have 3 dimensions, but got array with shape (826, 1)” in the line”model_attention.fit(trainX, trainY, epochs=epochs, batch_size=1, verbose=2)”, do you have any suggestion? Thank you.

After the “model_attention.summary()”, the Output Shape of the attention layer and the dense layer is “(None, 20,2), (None,20,1)”, which is different from what you present in this blog “(None 2) and (None, 1) “, is there something wrong?

I solved this problem by upgrading the version of Tensorflow from 1.14.0 to 2.7.0

Tensorflow 1.x is too old to use nowadays. The recent tutorials on this blog are all checked against the 2.x version while the older posts may need revision.

Reply to myself.

I run the code on colab and the result is the same as the author.

I think that might be caused by environment. QAQ

Thanks! That’s a good way to check. Usually for python libraries, you can print “libraryname.__version__” to check the version. That helps to identify when one machine report a different result than another.

I ran your code on my machine but it produces different summary for SimpleRNN+Attention network. The output of attention layer in my summary is

attention_1 (attention) (None,20, 2) 22

This produces an error for dense layer. I don’t know where is the problem!

Hi Nav…Hopefully the issue is now resolved with reinstallation of TensorFlow.

Regards,

Resolved the issue by upgrading tensorflow.

Thank you so much for this amazing tutorial!

You are very welcome Nav!

Regards,

Hi, thank you for the post.

For the class attention(Layer), why is there only one weight vector for the alignment score (e)?

In Bahdanau et al’s paper (https://arxiv.org/pdf/1409.0473.pdf), I see that the alignment model has three weights, v_a, W_a, and U_a.

Furthermore, can you clarify if attention mechanisms are appropriate for non-autoencoder architectures?

Thanks again.

Hi Ray…The following may be of interest to you

https://machinelearning.org.cn/the-transformer-attention-mechanism/

I noticed Keras has an attention layer implementation. Do you have any plans to use the keras attention layer implementation as an example in one of your blogs? Thank you.

https://keras.org.cn/api/layers/attention_layers/attention/

Hi!

It is my understanding that attention is a way to decrease information in matrices that are less semantic by multiplying them with a scalar value between 0 and 1.

In your case, you’re only left with one time step (the time_step dimension is lost after the attention layer).

Instead of

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_2 (InputLayer) [(None, 20, 1)] 0

_________________________________________________________________

simple_rnn_2 (SimpleRNN) (None, 20, 2) 8

_________________________________________________________________

attention_1 (attention) (None, 2) 22

_________________________________________________________________

dense_2 (Dense) (None, 1) 3

=================================================================

shouldn’t the attention yield these shapes

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_2 (InputLayer) [(None, 20, 1)] 0

_________________________________________________________________

simple_rnn_2 (SimpleRNN) (None, 20, 2) 8

_________________________________________________________________

attention_1 (attention) (None, 20, 2) ???

_________________________________________________________________

dense_2 (Dense) (None, 20, 1) ???

=================================================================

To compare with an NLP subject: shouldn’t you keep the whole sentence and multiply irrelevant words with a scalar value close to 0 instead of just choosing the most semantic word?

Hi Martin…Since semantics are the ultimate goal of NLP, I would recommend choosing the most semantic word.

Hello,

Thanks for the implementation of the custom attention layer.

Here I want to print the probability values or the alpha.

If I simply print(alpha), then it’s giving below output.

Tensor(“attention_1/ExpandDims:0”, shape=(None, 20, 1), dtype=float32)

But I want the values of alpha. Could you please help to find out the probability values?

reply to myself:

sorry,I saw the two part data was different

Hello, ,

The code runs for me, but the standard recurrent model usually outperforms the

attention model. Could this be just a version issue or is there a bigger problem?

My tensorflow is version 2.3.1 and my keras is version 2.4.0

Hi Ruan…What are you using as your measures of performance to compare the models?

Hello Dr.Brownlee

I want to build a model like

…

Input => input_shape => (1, 7, 1) => (batch_size=1, n_steps=7, n_features=1)

“LSTM” => stateful=True

“”Attention”” => Error

“Conv1D” => 128, 3,

Flatten

Dense

…

I can make (without “Attention_layer”)

…

Input

LSTM

Conv1D

Flatten

Dense

…

model.compile(loss=’mse’,

optimizer='adam',

metrics=[tf.keras.metrics.RootMeanSquaredError(),

tf.keras.losses.MeanAbsoluteError(),

‘mean_absolute_percentage_error’])

but when, I add “Attention_layer”, I will have error…

/usr/local/lib/python3.7/dist-packages/keras/engine/input_spec.py in assert_input_compatibility(input_spec, inputs, layer_name)

226 ndim = x.shape.rank

227 if ndim is not None and ndim < spec.min_ndim:

228 raise ValueError(f’Input {input_index} of layer “{layer_name}” ‘

229 ‘is incompatible with the layer: ‘

230 f’expected min_ndim={spec.min_ndim}, ‘

ValueError: Input 0 of layer “Conv1D_01” is incompatible with the layer: expected min_ndim=3, found ndim=2. Full shape received: (1, 7)

How can/must I change “Attention_layer” that I can put it between LSTM & Conv1D?

Thanks a lot.

Sorry, I forgot something.

I used 2 LSTM layers

with

return_sequences=True,

…

Input => input_shape => (1, 7, 1) => (batch_size=1, n_steps=7, n_features=1)

“LSTM” => stateful=True, return_sequences=True,

“LSTM” => stateful=True

“”Attention”” => Error

“Conv1D” => 128, 3,

Flatten

Dense

Dense

…

and, another question

How can we use “Conv2D”

…

Input => input_shape => (1, 7, 1) => (batch_size=1, n_steps=7, n_features=1)

“LSTM” => stateful=True, return_sequences=True,

“LSTM” => stateful=True

“”Attention”” => Error

“Conv2D” => 128, (3,3),

“Conv2D” => 64, (3,3),

Flatten

..

Thanks

Hi Arash…The following resource will help with understanding how to use Conv2D layers

https://pyimagesearch.com/2018/12/31/keras-conv2d-and-convolutional-layers/

Thanks a lot for last guiding.

But I don’t have problem with ‘Conv1D’ & ‘Conv2D’.

My problem is;

I don’t know how can I connect a “custom attention layer” to a Conv1D /Conv2D layer. when “custom attention layer” is before Conv1D /Conv2D layer and before of “custom attention layer” is a LSTM layer .

Even when I don’t use code of “custom attention layer” which is in this page, and I use “attention layer” that is in keras, like this

Input layer => LSTM layer => “custom attention layer” => Conv1D layer => …..

Again code can not compile without error.

I watched same my problem in this page,

https://stackoverflow.com/questions/69959445/connect-an-attention-block-to-the-conv1d-cnn-block-keras

But no one seems to have a solution to this problem.

Thanks for spending your time for reading this comment.

Hi

how to use different evaluation metrics to evaluate the results like MAE and etc?

Hello, thank you for the post.

i try to change this code and make it seq2seq for example but fail do you have any example for seq2seq?

Hi Mohammed…You may find the following resource of interest

https://machinelearning.org.cn/develop-encoder-decoder-model-sequence-sequence-prediction-keras/

i mean make it many to many 🙂

Thank you for your feedback Mohammed!

A beginner question

Inside the build() function of the custom attention layer, it has these two lines

self.W = self.add_weight(name=’attention_weight’, shape=(input_shape[-1], 1), initializer=’random_normal’, trainable=True)

self.b=self.add_weight(name=’attention_bias’, shape=(input_shape[1],1), initializer=’zeros’, trainable=True)

In the 2nd line, the shape param is (input_shape[1], 1). Why input_shape[1]? (vs. input_shape[-1] in the 1st line)

My understanding is that for RNN, input_shape = (time_steps, features).

So input_shape[-1] == input_shape[1]. But why the code wrote it differently among these two lines? What’s the reason behind?

Hi Patrick…Your understanding is correct. The alternative notation you mentioned should work as well. Let us know what you find with your implementation.

So the difference is not intentional, but inconsistency may create confusion to a learner. Thanks!

Thank you for your feedback Patrick!

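Hello,

Quick feedback: while the idea of "Bahdanau self-attention" is interesting, the training results for both scenarios (with/without attention) are completely meaningless because of the use of the Fibonacci sequence. The numbers grow essentially exponentially, so linearly scaling 1200 of them to the range 0…1 does not work. Look at your scaled trainX: most of the numbers are like 1E-200, which becomes 0 after conversion to tf.float32.

Neither network learns anything, and the mse values are purely artificial, so this tutorial does not show that attention helps. You could take the logarithm beforehand, but then the log-Fibonacci sequence is linear and therefore trivial. The Fibonacci sequence is simply not usable here.

Hi Markus…Thank you for your feedback!

I came here to give exactly the same feedback and write a similar comment. I spent a day trying to figure out what was "wrong" with my code, only to find that almost all of the model predictions were identical and therefore useless. (The model was not learning.) After some thought, I realized that when you convert a range as wide as (1, F_1200) to the range (0, 1) and back again, you cannot expect better results. Using the same dataset as in Chapter 7 (sunspots) for this chapter might be a better idea. I will try that myself later. Thank you for the book, though. I learned a lot.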

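Hello! Thank you for the tutorial.

What is the difference between applying attention right after the input, followed by the RNN, and placing attention after the RNN?

Hi Filipa…I would recommend trying both approaches. Let us know how your models perform.

Hi James, thank you for the post. What is the difference between the attention layer in this post and the attention layer in the 2017 post (Class AttentionDecoder(Recurrent):): https://machinelearning.org.cn/encoder-decoder-attention-sequence-to-sequence-prediction-keras/?

I am a bit confused. The attention in this post is a layer placed between the encoder and decoder layers, while in the code from the 2017 post, the attention layer is combined with the decoder layer? Thanks.

In the call method of the attention class, what are the corresponding Q, K, and V?

Hi J…The following resource may be of help to you.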

https://medium.com/analytics-vidhya/understanding-q-k-v-in-transformer-self-attention-9a5eddaa5960

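Which type of attention is this?

Hi Eman…The following resource may help you understand the role of attention in deep learning. The concepts presented form the basis of large language models such as ChatGPT.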

https://machinelearning.org.cn/transformer-models-with-attention/