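If you've looked at Keras models on GitHub, you've probably noticed that there are several different ways to create models in Keras. There's the Sequential model, which lets you define an entire model in a single statement, usually with some line breaks for readability. Then there's the functional interface, which allows for more complex model architectures. There's also subclassing keras.Model, which helps with reusability. In this article, you'll explore these different ways to create models in Keras, along with their advantages and drawbacks, so that you have the knowledge you need to build your own machine learning models in Keras.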

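After completing this tutorial, you will learn:

- The different ways that Keras offers to build models

- How to build Keras models using the Sequential class, the functional interface, and by subclassing keras.Model

- When to use each of the different Keras model-building methods

Let's get started!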

Three Ways to Build Machine Learning Models in Keras

Photo by Mike Szczepanski. Some rights reserved.

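Overview

This tutorial is divided into three parts, each covering a different way to build machine learning models in Keras:

- Using the Sequential class

- Using Keras' functional interface

- Subclassing keras.Model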

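Using the Sequential Class

The Sequential model is just what its name implies: a sequence of layers, one after another. According to the Keras documentation,

“A Sequential model is appropriate for a plain stack of layers where each layer has exactly one input tensor and one output tensor.”

It is a simple, easy-to-use way to start building your Keras model. To begin, import TensorFlow and then the Sequential model: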

```python
import tensorflow as tf
from tensorflow.keras import Sequential
```

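Then, you can start building your machine learning model by stacking layers together. For this example, let's build a LeNet5 model that takes images from the classic CIFAR-10 dataset as input: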

```python
from tensorflow.keras.layers import Dense, Input, Flatten, Conv2D, MaxPool2D

model = Sequential([
    Input(shape=(32,32,3,)),
    Conv2D(filters=6, kernel_size=(5,5), padding="same", activation="relu"),
    MaxPool2D(pool_size=(2,2)),
    Conv2D(filters=16, kernel_size=(5,5), padding="same", activation="relu"),
    MaxPool2D(pool_size=(2, 2)),
    Conv2D(filters=120, kernel_size=(5,5), padding="same", activation="relu"),
    Flatten(),
    Dense(units=84, activation="relu"),
    Dense(units=10, activation="softmax"),
])

print(model.summary())
```

Notice that all you did was pass a list of the model's layers to the Sequential constructor. The output of model.summary() shows the model's architecture:

```
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 conv2d_3 (Conv2D)           (None, 32, 32, 6)         456

 max_pooling2d_2 (MaxPooling  (None, 16, 16, 6)        0
 2D)

 conv2d_4 (Conv2D)           (None, 16, 16, 16)        2416

 max_pooling2d_3 (MaxPooling  (None, 8, 8, 16)         0
 2D)

 conv2d_5 (Conv2D)           (None, 8, 8, 120)         48120

 flatten_1 (Flatten)         (None, 7680)              0

 dense_2 (Dense)             (None, 84)                645204

 dense_3 (Dense)             (None, 10)                850

=================================================================
Total params: 697,046
Trainable params: 697,046
Non-trainable params: 0
_________________________________________________________________
```
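You can recompute the Param # column by hand. The pure-Python sketch below does this under a few standard assumptions:

- A Conv2D layer has kernel_height x kernel_width x input_channels x filters weights, plus one bias per filter.
- A Dense layer has inputs x units weights, plus one bias per unit.
- padding="same" keeps the spatial size, and each 2x2 MaxPool2D halves it.

The helper names are hypothetical and not part of Keras.

```python
def conv2d_params(kernel, in_channels, filters):
    # kernel x kernel weights per input channel per filter, plus one bias per filter
    return kernel * kernel * in_channels * filters + filters

def dense_params(inputs, units):
    # One weight per input per unit, plus one bias per unit
    return inputs * units + units

size = 32  # CIFAR-10 images are 32x32 with 3 channels
params = []
params.append(conv2d_params(5, 3, 6))     # Conv2D(6), padding="same" keeps 32x32
size //= 2                                # MaxPool2D(2x2) -> 16x16
params.append(conv2d_params(5, 6, 16))    # Conv2D(16) at 16x16
size //= 2                                # MaxPool2D(2x2) -> 8x8
params.append(conv2d_params(5, 16, 120))  # Conv2D(120) at 8x8
flattened = size * size * 120             # Flatten turns 8x8x120 into one vector
params.append(dense_params(flattened, 84))
params.append(dense_params(84, 10))

print(params)
print("flattened:", flattened, "total:", sum(params))
```

Most of the parameters sit in the first Dense layer, because Flatten turns the 8x8x120 feature map into 7,680 inputs.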

To test out the model, let's load the CIFAR-10 dataset and run model.compile and model.fit:

```python
from tensorflow import keras

(trainX, trainY), (testX, testY) = keras.datasets.cifar10.load_data()

model.compile(optimizer="adam", loss=tf.keras.losses.SparseCategoricalCrossentropy(), metrics="acc")

history = model.fit(x=trainX, y=trainY, batch_size=256, epochs=10, validation_data=(testX, testY))
```

This produces the following output:

```
Epoch 1/10
196/196 [==============================] - 13s 10ms/step - loss: 2.7669 - acc: 0.3648 - val_loss: 1.4869 - val_acc: 0.4713
Epoch 2/10
196/196 [==============================] - 2s 8ms/step - loss: 1.3883 - acc: 0.5097 - val_loss: 1.3654 - val_acc: 0.5205
Epoch 3/10
196/196 [==============================] - 2s 8ms/step - loss: 1.2239 - acc: 0.5694 - val_loss: 1.2908 - val_acc: 0.5472
Epoch 4/10
196/196 [==============================] - 2s 8ms/step - loss: 1.1020 - acc: 0.6120 - val_loss: 1.2640 - val_acc: 0.5555
Epoch 5/10
196/196 [==============================] - 2s 8ms/step - loss: 0.9931 - acc: 0.6498 - val_loss: 1.2850 - val_acc: 0.5555
Epoch 6/10
196/196 [==============================] - 2s 9ms/step - loss: 0.8888 - acc: 0.6903 - val_loss: 1.3150 - val_acc: 0.5646
Epoch 7/10
196/196 [==============================] - 2s 8ms/step - loss: 0.7882 - acc: 0.7229 - val_loss: 1.4273 - val_acc: 0.5426
Epoch 8/10
196/196 [==============================] - 2s 8ms/step - loss: 0.6915 - acc: 0.7582 - val_loss: 1.4574 - val_acc: 0.5604
Epoch 9/10
196/196 [==============================] - 2s 8ms/step - loss: 0.5934 - acc: 0.7931 - val_loss: 1.5304 - val_acc: 0.5631
Epoch 10/10
196/196 [==============================] - 2s 8ms/step - loss: 0.5113 - acc: 0.8214 - val_loss: 1.6355 - val_acc: 0.5512
```
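The loss values above come from SparseCategoricalCrossentropy. This loss expects integer class labels rather than one-hot vectors; CIFAR-10's trainY holds class indices 0 to 9 with shape (N, 1). Ignoring the numerical-stability clipping that Keras applies, the loss for a batch is roughly the mean negative log of the probability the model assigns to each example's true class. The function below is a hypothetical illustration of that formula, not the Keras implementation.

```python
import math

def sparse_categorical_crossentropy(labels, probs):
    """Mean of -log(p[true class]) over a batch (hypothetical sketch).

    labels: integer class indices, one per example
    probs:  per-example probability vectors, e.g. softmax outputs
    """
    losses = [-math.log(p[y]) for y, p in zip(labels, probs)]
    return sum(losses) / len(losses)

# A confident, correct prediction gives a small loss
confident = sparse_categorical_crossentropy([0], [[0.9, 0.05, 0.05]])
# A uniform guess over 10 classes gives log(10), about 2.303
uniform = sparse_categorical_crossentropy([3], [[0.1] * 10])
print(confident, uniform)
```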

That's pretty good for a first pass at a model. Here is the complete code for LeNet5 built with a Sequential model:

```python
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import Sequential
from tensorflow.keras.layers import Dense, Input, Flatten, Conv2D, MaxPool2D

(trainX, trainY), (testX, testY) = keras.datasets.cifar10.load_data()

model = Sequential([
    Input(shape=(32,32,3,)),
    Conv2D(filters=6, kernel_size=(5,5), padding="same", activation="relu"),
    MaxPool2D(pool_size=(2,2)),
    Conv2D(filters=16, kernel_size=(5,5), padding="same", activation="relu"),
    MaxPool2D(pool_size=(2, 2)),
    Conv2D(filters=120, kernel_size=(5,5), padding="same", activation="relu"),
    Flatten(),
    Dense(units=84, activation="relu"),
    Dense(units=10, activation="softmax"),
])

print(model.summary())

model.compile(optimizer="adam", loss=tf.keras.losses.SparseCategoricalCrossentropy(), metrics="acc")

history = model.fit(x=trainX, y=trainY, batch_size=256, epochs=10, validation_data=(testX, testY))
```
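Instead of passing a list to the constructor, you can also build a Sequential model incrementally with its add() method. The sketch below assumes TensorFlow 2.x with its bundled Keras and builds the same LeNet5 layer stack this way. Because add() is an ordinary method call, a Python loop can stack repeated layers without writing each one out. The loop over the two filter counts is just an illustrative choice.

```python
import tensorflow as tf
from tensorflow.keras import Sequential
from tensorflow.keras.layers import Dense, Input, Flatten, Conv2D, MaxPool2D

model = Sequential()
model.add(Input(shape=(32, 32, 3)))
# The first two convolutions are each followed by 2x2 max pooling
for filters in (6, 16):
    model.add(Conv2D(filters=filters, kernel_size=(5, 5), padding="same", activation="relu"))
    model.add(MaxPool2D(pool_size=(2, 2)))
model.add(Conv2D(filters=120, kernel_size=(5, 5), padding="same", activation="relu"))
model.add(Flatten())
model.add(Dense(units=84, activation="relu"))
model.add(Dense(units=10, activation="softmax"))

model.summary()
```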

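Now, let's explore what the other ways of constructing Keras models can do, starting with the functional interface!

Using Keras' Functional Interface

The next way of constructing Keras models you'll explore is Keras' functional interface. The functional interface uses layers as functions: each layer takes in a tensor and outputs a tensor. It is a more flexible way of representing a Keras model, because you are not limited to sequential models whose layers are stacked one on top of another. Instead, you can build models that branch into multiple paths, have multiple inputs, and so on.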
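The hypothetical model below sketches what multiple inputs look like in practice. The input names, the sizes, and the two output heads are all made up for illustration. The model merges two inputs with a Concatenate layer and, because the functional interface allows it as well, produces two outputs. It assumes TensorFlow 2.x with its bundled Keras, where Model(inputs=[...], outputs=[...]) declares several inputs and outputs.

```python
import numpy as np
from tensorflow.keras.layers import Concatenate, Dense, Input
from tensorflow.keras.models import Model

# Two hypothetical inputs, e.g. precomputed image features and tabular metadata
image_features = Input(shape=(64,), name="image_features")
metadata = Input(shape=(8,), name="metadata")

# Each layer is called like a function on the tensors produced before it
x = Concatenate()([image_features, metadata])   # shape (None, 72)
x = Dense(32, activation="relu")(x)

# Two hypothetical output heads branching from the same hidden tensor
class_probs = Dense(10, activation="softmax", name="class_probs")(x)
score = Dense(1, name="score")(x)

model = Model(inputs=[image_features, metadata], outputs=[class_probs, score])

batch = [np.zeros((2, 64), dtype="float32"), np.zeros((2, 8), dtype="float32")]
probs, scores = model(batch)
print(probs.shape, scores.shape)
```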

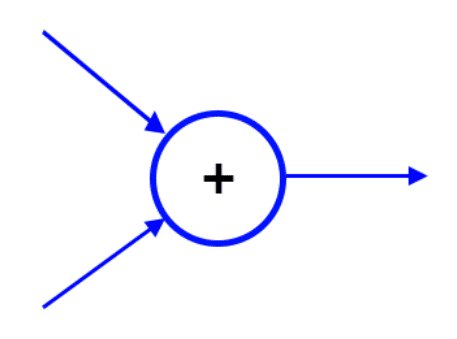

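Consider an Add layer, which takes inputs from two or more paths and adds the tensors together.

Because this can't be represented by stacking layers one after another, you can't define it with a Sequential object. This is where Keras' functional interface comes in. You can define an Add layer with two input tensors like this: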

```python
from tensorflow.keras.layers import Add

# layer1 and layer2 are output tensors of earlier layers and must have the same shape
add_layer = Add()([layer1, layer2])
```
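The snippet above assumes that layer1 and layer2 already exist. Below is a self-contained sketch that assumes TensorFlow 2.x with its bundled Keras and two hypothetical Dense branches fed from one input. The last lines call Add directly on constant tensors to show that it sums its inputs element-wise.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras.layers import Add, Dense, Input
from tensorflow.keras.models import Model

inputs = Input(shape=(16,))
# Two parallel branches over the same input; both must produce the same shape
layer1 = Dense(8, activation="relu")(inputs)
layer2 = Dense(8, activation="relu")(inputs)
add_layer = Add()([layer1, layer2])
model = Model(inputs=inputs, outputs=add_layer)

out = model(np.ones((2, 16), dtype="float32"))
print(out.shape)

# Add is an element-wise sum of its input tensors
summed = Add()([tf.constant([[1.0, 2.0]]), tf.constant([[10.0, 20.0]])])
print(np.asarray(summed))
```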

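Now that you've seen a quick example of the functional interface, let's look at the LeNet5 model you defined earlier with the Sequential class, this time written with the functional interface: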

```python
import tensorflow as tf
from tensorflow.keras.layers import Dense, Input, Flatten, Conv2D, MaxPool2D
from tensorflow.keras.models import Model

input_layer = Input(shape=(32,32,3,))
x = Conv2D(filters=6, kernel_size=(5,5), padding="same", activation="relu")(input_layer)
x = MaxPool2D(pool_size=(2,2))(x)
x = Conv2D(filters=16, kernel_size=(5,5), padding="same", activation="relu")(x)
x = MaxPool2D(pool_size=(2, 2))(x)
x = Conv2D(filters=120, kernel_size=(5,5), padding="same", activation="relu")(x)
x = Flatten()(x)
x = Dense(units=84, activation="relu")(x)
x = Dense(units=10, activation="softmax")(x)

model = Model(inputs=input_layer, outputs=x)

print(model.summary())
```

Looking at the model summary:

```
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 input_2 (InputLayer)        [(None, 32, 32, 3)]       0

 conv2d_6 (Conv2D)           (None, 32, 32, 6)         456

 max_pooling2d_2 (MaxPooling  (None, 16, 16, 6)        0
 2D)

 conv2d_7 (Conv2D)           (None, 16, 16, 16)        2416

 max_pooling2d_3 (MaxPooling  (None, 8, 8, 16)         0
 2D)

 conv2d_8 (Conv2D)           (None, 8, 8, 120)         48120

 flatten_2 (Flatten)         (None, 7680)              0

 dense_4 (Dense)             (None, 84)                645204

 dense_5 (Dense)             (None, 10)                850

=================================================================
Total params: 697,046
Trainable params: 697,046
Non-trainable params: 0
_________________________________________________________________
```

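As you can see, the architecture is the same for both LeNet5 models, whether you implement it with the functional interface or with the Sequential class.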
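One way to check this claim in code is to build both versions from the same layer definitions and compare count_params(). The sketch below assumes TensorFlow 2.x with its bundled Keras. The helper lenet5_layers() is hypothetical; it returns fresh layer objects on each call so that the two models don't share weights. The loop in the functional version makes explicit that each layer is applied like a function to the previous output tensor.

```python
from tensorflow.keras import Sequential
from tensorflow.keras.layers import Dense, Input, Flatten, Conv2D, MaxPool2D
from tensorflow.keras.models import Model

def lenet5_layers():
    # Hypothetical helper: returns new, unshared layer instances on every call
    return [
        Conv2D(filters=6, kernel_size=(5, 5), padding="same", activation="relu"),
        MaxPool2D(pool_size=(2, 2)),
        Conv2D(filters=16, kernel_size=(5, 5), padding="same", activation="relu"),
        MaxPool2D(pool_size=(2, 2)),
        Conv2D(filters=120, kernel_size=(5, 5), padding="same", activation="relu"),
        Flatten(),
        Dense(units=84, activation="relu"),
        Dense(units=10, activation="softmax"),
    ]

sequential_model = Sequential([Input(shape=(32, 32, 3))] + lenet5_layers())

input_layer = Input(shape=(32, 32, 3))
x = input_layer
for layer in lenet5_layers():
    x = layer(x)  # functional style: call each layer on the previous tensor
functional_model = Model(inputs=input_layer, outputs=x)

print(sequential_model.count_params(), functional_model.count_params())
```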

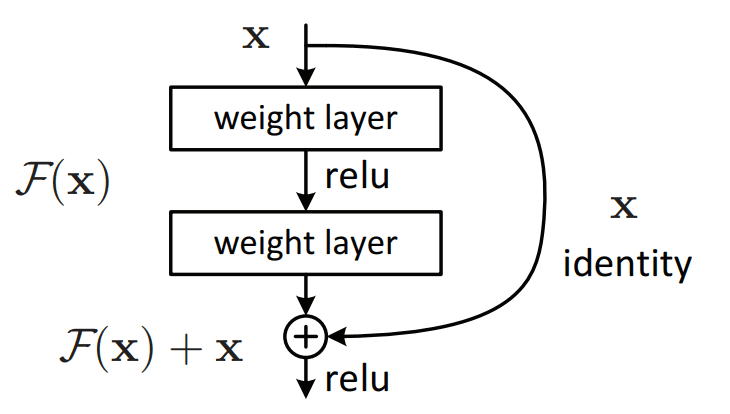

现在你已经了解了如何使用Keras的函数式接口,让我们来看一个可以使用函数式接口实现但不能用Sequential类实现的模型架构。本例中,我们将看残差块,它在ResNet中被提出。从视觉上看,残差块如下所示:

你可以看到,使用Sequential类定义的模型由于跳跃连接(skip connection)而无法构建这样的块,这使得该块无法表示为简单的层堆栈。使用函数式接口是定义ResNet块的一种方法。

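```python
from tensorflow.keras.layers import Add, BatchNormalization, Conv2D
from tensorflow.keras.activations import relu

def residual_block(x, filters):
    # Store the input tensor so it can be added back later as the identity mapping
    identity = x
    # strides=(1, 1) with padding="same" keeps the spatial size unchanged, so x and
    # identity have the same shape at the Add (x must already have `filters` channels);
    # downsampling is done by the layers placed between blocks
    x = Conv2D(filters=filters, kernel_size=(3, 3), strides=(1, 1), padding="same")(x)
    x = BatchNormalization()(x)
    x = relu(x)
    x = Conv2D(filters=filters, kernel_size=(3, 3), padding="same")(x)
    x = BatchNormalization()(x)
    x = Add()([identity, x])
    x = relu(x)
    return x
```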

Then, you can use the functional interface to build a simple network from these residual blocks:

```python
input_layer = Input(shape=(32,32,3,))
x = Conv2D(filters=32, kernel_size=(3, 3), padding="same", activation="relu")(input_layer)
x = residual_block(x, 32)
x = Conv2D(filters=64, kernel_size=(3, 3), strides=(2, 2), padding="same", activation="relu")(x)
x = residual_block(x, 64)
x = Conv2D(filters=128, kernel_size=(3, 3), strides=(2, 2), padding="same", activation="relu")(x)
x = residual_block(x, 128)
x = Flatten()(x)
x = Dense(units=84, activation="relu")(x)
x = Dense(units=10, activation="softmax")(x)

model = Model(inputs=input_layer, outputs = x)
print(model.summary())

model.compile(optimizer="adam", loss=tf.keras.losses.SparseCategoricalCrossentropy(), metrics="acc")

history = model.fit(x=trainX, y=trainY, batch_size=256, epochs=10, validation_data=(testX, testY))
```

Running this code prints the model summary followed by the training results:

```
__________________________________________________________________________________________________
 Layer (type)                   Output Shape         Param #     Connected to
==================================================================================================
 input_1 (InputLayer)           [(None, 32, 32, 3)]  0           []

 conv2d (Conv2D)                (None, 32, 32, 32)   896         ['input_1[0][0]']

 conv2d_1 (Conv2D)              (None, 32, 32, 32)   9248        ['conv2d[0][0]']

 batch_normalization (BatchNorm  (None, 32, 32, 32)  128         ['conv2d_1[0][0]']
 alization)

 tf.nn.relu (TFOpLambda)        (None, 32, 32, 32)   0           ['batch_normalization[0][0]']

 conv2d_2 (Conv2D)              (None, 32, 32, 32)   9248        ['tf.nn.relu[0][0]']

 batch_normalization_1 (BatchNo  (None, 32, 32, 32)  128         ['conv2d_2[0][0]']
 rmalization)

 add (Add)                      (None, 32, 32, 32)   0           ['conv2d[0][0]',
                                                                  'batch_normalization_1[0][0]']

 tf.nn.relu_1 (TFOpLambda)      (None, 32, 32, 32)   0           ['add[0][0]']

 conv2d_3 (Conv2D)              (None, 16, 16, 64)   18496       ['tf.nn.relu_1[0][0]']

 conv2d_4 (Conv2D)              (None, 16, 16, 64)   36928       ['conv2d_3[0][0]']

 batch_normalization_2 (BatchNo  (None, 16, 16, 64)  256         ['conv2d_4[0][0]']
 rmalization)

 tf.nn.relu_2 (TFOpLambda)      (None, 16, 16, 64)   0           ['batch_normalization_2[0][0]']

 conv2d_5 (Conv2D)              (None, 16, 16, 64)   36928       ['tf.nn.relu_2[0][0]']

 batch_normalization_3 (BatchNo  (None, 16, 16, 64)  256         ['conv2d_5[0][0]']
 rmalization)

 add_1 (Add)                    (None, 16, 16, 64)   0           ['conv2d_3[0][0]',
                                                                  'batch_normalization_3[0][0]']

 tf.nn.relu_3 (TFOpLambda)      (None, 16, 16, 64)   0           ['add_1[0][0]']

 conv2d_6 (Conv2D)              (None, 8, 8, 128)    73856       ['tf.nn.relu_3[0][0]']

 conv2d_7 (Conv2D)              (None, 8, 8, 128)    147584      ['conv2d_6[0][0]']

 batch_normalization_4 (BatchNo  (None, 8, 8, 128)   512         ['conv2d_7[0][0]']
 rmalization)

 tf.nn.relu_4 (TFOpLambda)      (None, 8, 8, 128)    0           ['batch_normalization_4[0][0]']

 conv2d_8 (Conv2D)              (None, 8, 8, 128)    147584      ['tf.nn.relu_4[0][0]']

 batch_normalization_5 (BatchNo  (None, 8, 8, 128)   512         ['conv2d_8[0][0]']
 rmalization)

 add_2 (Add)                    (None, 8, 8, 128)    0           ['conv2d_6[0][0]',
                                                                  'batch_normalization_5[0][0]']

 tf.nn.relu_5 (TFOpLambda)      (None, 8, 8, 128)    0           ['add_2[0][0]']

 flatten (Flatten)              (None, 8192)         0           ['tf.nn.relu_5[0][0]']

 dense (Dense)                  (None, 84)           688212      ['flatten[0][0]']

 dense_1 (Dense)                (None, 10)           850         ['dense[0][0]']

==================================================================================================
Total params: 1,171,622
Trainable params: 1,170,726
Non-trainable params: 896
__________________________________________________________________________________________________
None
Epoch 1/10
196/196 [==============================] - 21s 46ms/step - loss: 3.4463 - acc: 0.3635 - val_loss: 1.8015 - val_acc: 0.3459
Epoch 2/10
196/196 [==============================] - 8s 43ms/step - loss: 1.3267 - acc: 0.5200 - val_loss: 1.3895 - val_acc: 0.5069
Epoch 3/10
196/196 [==============================] - 8s 43ms/step - loss: 1.1095 - acc: 0.6062 - val_loss: 1.2008 - val_acc: 0.5651
Epoch 4/10
196/196 [==============================] - 9s 44ms/step - loss: 0.9618 - acc: 0.6585 - val_loss: 1.5411 - val_acc: 0.5226
Epoch 5/10
196/196 [==============================] - 9s 44ms/step - loss: 0.8656 - acc: 0.6968 - val_loss: 1.1012 - val_acc: 0.6234
Epoch 6/10
196/196 [==============================] - 8s 43ms/step - loss: 0.7622 - acc: 0.7361 - val_loss: 1.1355 - val_acc: 0.6168
Epoch 7/10
196/196 [==============================] - 9s 44ms/step - loss: 0.6801 - acc: 0.7602 - val_loss: 1.1561 - val_acc: 0.6187
Epoch 8/10
196/196 [==============================] - 8s 43ms/step - loss: 0.6106 - acc: 0.7905 - val_loss: 1.1100 - val_acc: 0.6401
Epoch 9/10
196/196 [==============================] - 9s 43ms/step - loss: 0.5367 - acc: 0.8146 - val_loss: 1.2989 - val_acc: 0.6058
Epoch 10/10
196/196 [==============================] - 9s 47ms/step - loss: 0.4776 - acc: 0.8348 - val_loss: 1.0098 - val_acc: 0.6757
```
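You can also reproduce the totals in this summary by hand. The pure-Python sketch below rests on three assumptions:

- The usual parameter formulas apply.
- With padding="same" and stride s, the output size is ceil(size / s).
- Each BatchNormalization layer holds four values per channel: a trainable scale (gamma) and offset (beta), plus a moving mean and moving variance that gradient descent does not update.

The last point explains the 896 non-trainable parameters. The helper names are hypothetical.

```python
import math

def conv2d_params(kernel, in_channels, filters):
    # kernel x kernel weights per input channel per filter, plus one bias per filter
    return kernel * kernel * in_channels * filters + filters

def dense_params(inputs, units):
    return inputs * units + units

def same_padding_size(size, stride):
    # Spatial output size of a convolution with padding="same"
    return math.ceil(size / stride)

trainable = 0
non_trainable = 0
size, channels = 32, 3

# Stem: Conv2D(32, 3x3), stride 1
trainable += conv2d_params(3, channels, 32)
channels = 32

# (filters, stride of the Conv2D placed before the residual block)
for filters, stride in [(32, None), (64, 2), (128, 2)]:
    if stride is not None:
        trainable += conv2d_params(3, channels, filters)
        size, channels = same_padding_size(size, stride), filters
    # residual_block: two Conv2D + BatchNormalization pairs at a constant shape
    for _ in range(2):
        trainable += conv2d_params(3, channels, channels)
        trainable += 2 * channels       # gamma and beta (trained)
        non_trainable += 2 * channels   # moving mean and variance (not trained)

flattened = size * size * channels
trainable += dense_params(flattened, 84) + dense_params(84, 10)

print("flattened:", flattened)
print("trainable:", trainable, "non-trainable:", non_trainable,
      "total:", trainable + non_trainable)
```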

Here is the complete code for the simple network that uses residual blocks:

```python
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras.layers import Input, Conv2D, BatchNormalization, Add, Flatten, Dense
from tensorflow.keras.activations import relu
from tensorflow.keras.models import Model

def residual_block(x, filters):
    # Store the input tensor so it can be added back later as the identity mapping
    identity = x
    # strides=(1, 1) keeps the spatial size unchanged so x and identity can be added
    x = Conv2D(filters=filters, kernel_size=(3, 3), strides=(1, 1), padding="same")(x)
    x = BatchNormalization()(x)
    x = relu(x)
    x = Conv2D(filters=filters, kernel_size=(3, 3), padding="same")(x)
    x = BatchNormalization()(x)
    x = Add()([identity, x])
    x = relu(x)
    return x

(trainX, trainY), (testX, testY) = keras.datasets.cifar10.load_data()

input_layer = Input(shape=(32,32,3,))
x = Conv2D(filters=32, kernel_size=(3, 3), padding="same", activation="relu")(input_layer)
x = residual_block(x, 32)
x = Conv2D(filters=64, kernel_size=(3, 3), strides=(2, 2), padding="same", activation="relu")(x)
x = residual_block(x, 64)
x = Conv2D(filters=128, kernel_size=(3, 3), strides=(2, 2), padding="same", activation="relu")(x)
x = residual_block(x, 128)
x = Flatten()(x)
x = Dense(units=84, activation="relu")(x)
x = Dense(units=10, activation="softmax")(x)

model = Model(inputs=input_layer, outputs = x)
print(model.summary())

model.compile(optimizer="adam", loss=tf.keras.losses.SparseCategoricalCrossentropy(), metrics="acc")

history = model.fit(x=trainX, y=trainY, batch_size=256, epochs=10, validation_data=(testX, testY))
```
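The residual_block above uses strides=(1, 1) and keeps the input's channel count because Add requires identity and x to have the same shape. If you want a block that also downsamples or changes the channel count, the ResNet paper describes a projection shortcut: a 1x1 convolution on the identity path. The sketch below assumes TensorFlow 2.x with its bundled Keras. residual_block_with_projection is a hypothetical name, and the batch normalization on the shortcut is one common choice rather than a requirement. It uses the ReLU layer instead of the relu function, which behaves the same here.

```python
import numpy as np
from tensorflow.keras.layers import Add, BatchNormalization, Conv2D, Input, ReLU
from tensorflow.keras.models import Model

def residual_block_with_projection(x, filters, strides=(1, 1)):
    # Hypothetical variant of residual_block that may downsample and/or change channels
    identity = x
    x = Conv2D(filters=filters, kernel_size=(3, 3), strides=strides, padding="same")(x)
    x = BatchNormalization()(x)
    x = ReLU()(x)
    x = Conv2D(filters=filters, kernel_size=(3, 3), padding="same")(x)
    x = BatchNormalization()(x)
    if strides != (1, 1) or identity.shape[-1] != filters:
        # 1x1 projection so the shortcut has the same shape as x before the Add
        identity = Conv2D(filters=filters, kernel_size=(1, 1), strides=strides, padding="same")(identity)
        identity = BatchNormalization()(identity)
    x = Add()([identity, x])
    return ReLU()(x)

inputs = Input(shape=(32, 32, 32))
outputs = residual_block_with_projection(inputs, filters=64, strides=(2, 2))
model = Model(inputs=inputs, outputs=outputs)

out = model(np.zeros((1, 32, 32, 32), dtype="float32"))
print(out.shape)
```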

Subclassing keras.Model

Keras also provides an object-oriented approach to creating models, which helps with reusability and allows you to represent the models you want to create as classes. This representation might be more intuitive since you can think about models as a set of layers strung together to form your network.

To begin subclassing keras.Model, you first need to import it

```python
from tensorflow.keras.models import Model
```

Then, you can start subclassing keras.Model. First, create the layers that your call() method will use in the constructor. That way, each layer is instantiated only once rather than every time you call your model. To keep in line with the previous examples, let's build a LeNet5 model here as well.

```python
import tensorflow as tf
from tensorflow.keras.layers import Dense, Flatten, Conv2D, MaxPool2D

class LeNet5(tf.keras.Model):
    def __init__(self):
        super(LeNet5, self).__init__()
        # Create the layers in the initializer so they are instantiated only once
        self.conv1 = Conv2D(filters=6, kernel_size=(5,5), padding="same", activation="relu")
        self.max_pool2x2 = MaxPool2D(pool_size=(2,2))
        self.conv2 = Conv2D(filters=16, kernel_size=(5,5), padding="same", activation="relu")
        self.conv3 = Conv2D(filters=120, kernel_size=(5,5), padding="same", activation="relu")
        self.flatten = Flatten()
        self.fc2 = Dense(units=84, activation="relu")
        self.fc3 = Dense(units=10, activation="softmax")
```

Then, override the call method to define what happens when the model is called. Your call() implementation describes the model's forward pass using the layers you built in the initializer.

```python
def call(self, input_tensor):
    # Don't create layers here; create them in the initializer instead,
    # otherwise you will get an error that a tf.Variable can only be created once
    conv1 = self.conv1(input_tensor)
    maxpool1 = self.max_pool2x2(conv1)
    conv2 = self.conv2(maxpool1)
    maxpool2 = self.max_pool2x2(conv2)
    conv3 = self.conv3(maxpool2)
    flatten = self.flatten(conv3)
    fc2 = self.fc2(flatten)
    fc3 = self.fc3(fc2)
    return fc3
```
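Here is what the comment in call() guards against. A Keras layer creates its weights the first time it is called and reuses them on every later call. The short sketch below, which assumes TensorFlow 2.x with its bundled Keras, calls one Dense layer twice. Afterwards the layer still holds a single kernel and bias, and it produces identical outputs for identical inputs. A layer constructed inside call() would instead get fresh weights on every invocation.

```python
import numpy as np
import tensorflow as tf

dense = tf.keras.layers.Dense(4)
x = np.ones((2, 3), dtype="float32")

first = dense(x)   # the kernel and bias are created on this first call
second = dense(x)  # ...and reused here, so no new variables are made

print(len(dense.weights))          # kernel + bias
print(np.allclose(first, second))  # same weights, same output
```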

It is important to create all the layers in the class constructor, not inside the call() method. call() is invoked many times with different input tensors, and every call should use the same layer objects so that training updates a single set of weights. You can then instantiate your new LeNet5 class and use it as part of a model:

```python
input_layer = Input(shape=(32,32,3,))
x = LeNet5()(input_layer)
model = Model(inputs=input_layer, outputs=x)

print(model.summary(expand_nested=True))
```

You can see that the model has the same number of parameters as the two earlier versions of LeNet5, with the same structure inside it:

```
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 input_1 (InputLayer)        [(None, 32, 32, 3)]       0

 le_net5 (LeNet5)            (None, 10)                697046
|¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯|
| conv2d (Conv2D)            multiple                  456       |
|                                                               |
| max_pooling2d (MaxPooling2D  multiple                0         |
| )                                                             |
|                                                               |
| conv2d_1 (Conv2D)          multiple                  2416      |
|                                                               |
| conv2d_2 (Conv2D)          multiple                  48120     |
|                                                               |
| flatten (Flatten)          multiple                  0         |
|                                                               |
| dense (Dense)              multiple                  645204    |
|                                                               |
| dense_1 (Dense)            multiple                  850       |
¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯¯
=================================================================
Total params: 697,046
Trainable params: 697,046
Non-trainable params: 0
_________________________________________________________________
```

Combining all the code to create your LeNet5 subclass of keras.Model

```python
import tensorflow as tf
from tensorflow.keras.layers import Dense, Input, Flatten, Conv2D, MaxPool2D
from tensorflow.keras.models import Model

class LeNet5(tf.keras.Model):
    def __init__(self):
        super(LeNet5, self).__init__()
        # Create the layers in the initializer
        self.conv1 = Conv2D(filters=6, kernel_size=(5,5), padding="same", activation="relu")
        self.max_pool2x2 = MaxPool2D(pool_size=(2,2))
        self.conv2 = Conv2D(filters=16, kernel_size=(5,5), padding="same", activation="relu")
        self.conv3 = Conv2D(filters=120, kernel_size=(5,5), padding="same", activation="relu")
        self.flatten = Flatten()
        self.fc2 = Dense(units=84, activation="relu")
        self.fc3 = Dense(units=10, activation="softmax")

    def call(self, input_tensor):
        # Don't add layers here; create them in the initializer instead,
        # otherwise you will get an error that a tf.Variable can only be created once
        x = self.conv1(input_tensor)
        x = self.max_pool2x2(x)
        x = self.conv2(x)
        x = self.max_pool2x2(x)
        x = self.conv3(x)
        x = self.flatten(x)
        x = self.fc2(x)
        x = self.fc3(x)
        return x

input_layer = Input(shape=(32,32,3,))
x = LeNet5()(input_layer)
model = Model(inputs=input_layer, outputs=x)
print(model.summary(expand_nested=True))
```
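Because a subclass creates its layers in the constructor, constructor arguments are a natural way to make it reusable, which is the benefit mentioned at the start of this section. The sketch below is hypothetical: SmallClassifier, hidden_units, and num_classes are illustrative names, not part of Keras. It assumes TensorFlow 2.x with its bundled Keras. It also shows that you can call a subclassed model directly on a batch of data without wrapping it in a functional Model.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras.layers import Dense, Flatten

class SmallClassifier(tf.keras.Model):
    def __init__(self, hidden_units=32, num_classes=10):
        super().__init__()
        # Layer sizes come from constructor arguments, so one class serves many tasks
        self.flatten = Flatten()
        self.hidden = Dense(units=hidden_units, activation="relu")
        self.classifier = Dense(units=num_classes, activation="softmax")

    def call(self, input_tensor):
        x = self.flatten(input_tensor)
        x = self.hidden(x)
        return self.classifier(x)

images = np.zeros((4, 32, 32, 3), dtype="float32")
cifar10_model = SmallClassifier(num_classes=10)
cifar100_model = SmallClassifier(hidden_units=64, num_classes=100)

cifar10_probs = cifar10_model(images)    # weights are created on this first call
cifar100_probs = cifar100_model(images)
print(cifar10_probs.shape, cifar100_probs.shape)
```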

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Papers

- Deep Residual Learning for Image Recognition (the ResNet paper)

API

Summary

In this post, you have seen three different ways to create models in Keras: using the Sequential class, using the functional interface, and subclassing keras.Model. You have also seen the same LeNet5 model built with each method, and a use case that the functional interface can handle but the Sequential class cannot.

Specifically, you learned:

- The different ways that Keras offers to build models

- How to build Keras models using the Sequential class, the functional interface, and by subclassing keras.Model

- When to use each of the different Keras model-building methods
